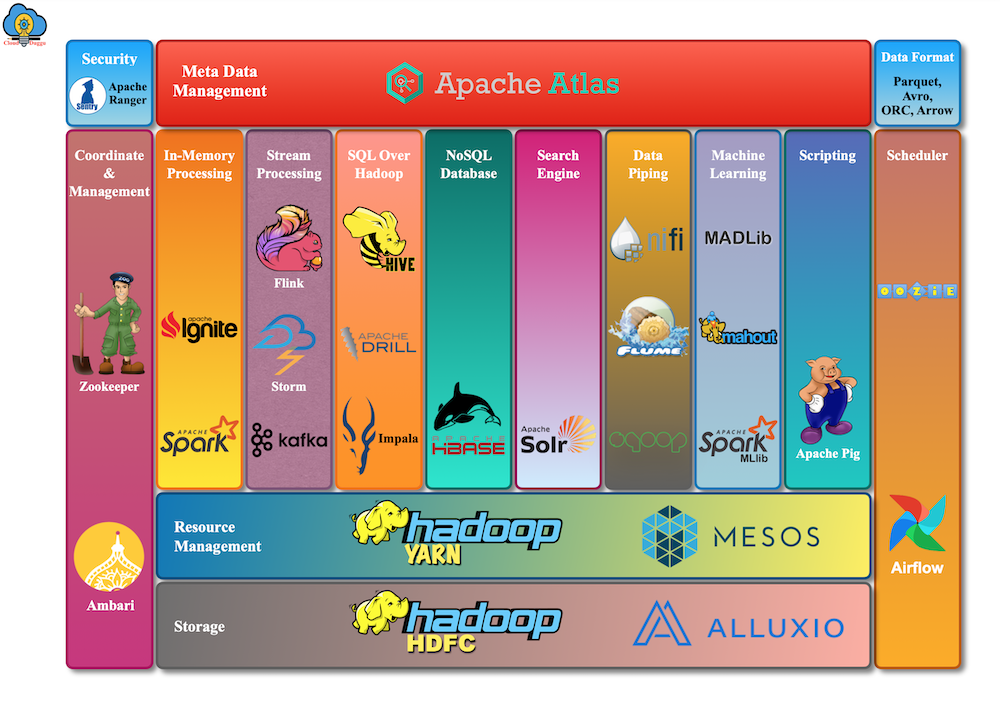

This video walks beginners through the basics of Hadoop, from the early days of the client-server model through to the current Hadoop ecosystem. Apache Hadoop is a framework for running applications on large clusters built of commodity hardware. The Hadoop framework transparently provides applications with both reliability and data motion. Hadoop implements a computational paradigm named Map/Reduce, in which the application is divided into many small fragments of work, each of which may be executed or re-executed on any node in the cluster. Apache Parquet is a columnar storage format available to any project in the Hadoop ecosystem, regardless of the choice of data processing framework, data model, or programming language.
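
The Map/Reduce paradigm mentioned above can be illustrated with a toy word count. The following is only a single-machine analogy built from standard Unix tools, not Hadoop code; the function name `word_count` is made up for this sketch. Splitting the text into words plays the role of the map step, sorting stands in for the shuffle, and counting runs of identical words is the reduce step.

```shell
# Toy, single-machine analogy of Map/Reduce word counting (not Hadoop itself).
# map:     emit one word per line
# shuffle: sort so that identical words end up next to each other
# reduce:  count each run of identical words and print "word<TAB>count"
word_count() {
  tr -s '[:space:]' '\n' \
    | sed '/^$/d' \
    | sort \
    | uniq -c \
    | awk '{ print $2 "\t" $1 }'
}

# Example run on a short input line.
printf 'to be or not to be\n' | word_count
```

In a real cluster each stage runs in parallel over fragments of the input, and a failed fragment can simply be re-executed elsewhere, which is what the description above refers to.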

Spark uses Hadoop client libraries for HDFS and YARN. Starting with Spark 1.4, the project packages “Hadoop free” builds that let you more easily connect a single Spark binary to any Hadoop version. To use these builds, you need to modify SPARK_DIST_CLASSPATH to include Hadoop’s package jars. The most convenient way to do this is to add an entry to conf/spark-env.sh.

This page describes how to connect Spark to Hadoop for different types of distributions.

For Apache distributions, you can use Hadoop’s ‘classpath’ command. For instance:
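
A minimal sketch of such an entry in conf/spark-env.sh, assuming a working `hadoop` executable is available. The helper name `set_spark_dist_classpath` and the path `/path/to/hadoop/bin/hadoop` are placeholders introduced for illustration, not part of Spark or Hadoop.

```shell
# conf/spark-env.sh (sketch)

# Hypothetical helper: set SPARK_DIST_CLASSPATH from the output of
# `hadoop classpath`. $1 is the hadoop executable to use and defaults
# to whichever `hadoop` is found on PATH.
set_spark_dist_classpath() {
  hadoop_bin="${1:-hadoop}"
  if command -v "$hadoop_bin" >/dev/null 2>&1; then
    SPARK_DIST_CLASSPATH="$("$hadoop_bin" classpath)"
    export SPARK_DIST_CLASSPATH
  else
    echo "hadoop binary not found: $hadoop_bin" >&2
    return 1
  fi
}

# Use the hadoop binary on PATH. To use a specific installation instead,
# pass its location, e.g.:
#   set_spark_dist_classpath /path/to/hadoop/bin/hadoop
set_spark_dist_classpath || true
```

When `hadoop` is known to be on the PATH, the whole entry can shrink to a single line, `export SPARK_DIST_CLASSPATH=$(hadoop classpath)`. The helper form only adds a check so that a missing binary produces a clear message instead of an empty classpath.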

To run the Hadoop free build of Spark on Kubernetes, the executor image must contain the appropriate version of the Hadoop binaries and have the correct SPARK_DIST_CLASSPATH value set. The relevant changes are made in the executor Dockerfile.
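
One way to satisfy the SPARK_DIST_CLASSPATH requirement is to derive the value from the Hadoop binaries bundled in the image when the container starts. The following is a minimal shell sketch of that step, assuming the Dockerfile has already copied a Hadoop distribution into the image and set HADOOP_HOME to its location. The function name `resolve_spark_dist_classpath` is hypothetical and chosen only for this example.

```shell
# Sketch of a classpath step for an executor entrypoint script
# (illustrative; adapt names and paths to your image).

# Hypothetical helper: print the classpath Spark should use.
# A value already present in SPARK_DIST_CLASSPATH is kept as-is;
# otherwise the Hadoop binaries under HADOOP_HOME are asked for theirs.
resolve_spark_dist_classpath() {
  if [ -n "${SPARK_DIST_CLASSPATH:-}" ]; then
    printf '%s\n' "$SPARK_DIST_CLASSPATH"
  elif [ -n "${HADOOP_HOME:-}" ] && [ -x "$HADOOP_HOME/bin/hadoop" ]; then
    "$HADOOP_HOME/bin/hadoop" classpath
  else
    echo "set HADOOP_HOME or SPARK_DIST_CLASSPATH" >&2
    return 1
  fi
}

# In an entrypoint this would run before the executor is launched:
#   SPARK_DIST_CLASSPATH="$(resolve_spark_dist_classpath)" || exit 1
#   export SPARK_DIST_CLASSPATH
```

Keeping a pre-set value means a classpath customized in the image is not overwritten at startup, while images that only provide HADOOP_HOME still get a usable default.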